Compiling a Working Linux Kernel for Confidential VMs

In recent months, I’ve been trying to build a custom disk image to deploy onto Confidential VMs (CVMs) across different cloud providers, mainly Azure, AWS and GCP. At the start, to get things moving quickly, I chose to use the kernels that came with the Ubuntu 24.04 images on Azure’s and GCP’s CVM offerings[1]. This was because custom out-of-tree patches are needed to support both Intel TDX and AMD SEV-SNP, and not every patch had been merged into the kernel when I started looking into this. In fact, different parties, such as tdx-linux and canonical/tdx, continue to maintain their own out-of-tree patches until everything is merged into the mainline Linux kernel. As I understand it, the patches add a mechanism for the guest and host to communicate, since the guest’s memory is encrypted, and also add a device that the guest can use to request an attestation report from the host.

However, once I got all the basic components up for a working disk image, I decided that I wanted to compile my own kernel for the following reasons:

- It’s simply easier to maintain the implementation if I can use the same kernel for all 3 cloud providers.

- I had also written a custom out-of-tree kernel module. To compile it, I had to keep two separate VMs, one for each of the two specific kernel versions, which was rather troublesome.

- I was running into an intermittent boot issue on AWS, which appeared to be some sort of race condition that might cause the APIC not to be handled correctly during boot. Since I didn’t know which patch would fix it, moving to a newer kernel seemed like a reasonable way to avoid it.

- Compiling my own kernel meant I had the freedom to use as new a kernel as possible. This mattered because patches that fix underlying bugs in, or add new features to, the CVM implementations are constantly being submitted; the most recent one I had seen was only 3 weeks old.

I thought it would be a relatively simple affair: I took a base kernel config from a GCP CVM, enabled the Hyper-V related options, compiled kernel 6.15.2, and used it in my custom disk image, expecting it to just work. Frustratingly, while it booted and initialized everything fine on AWS and Azure, the vTPM failed to initialize on GCP. With some printk debugging, I found the exact line of code where the error occurred, in an ioremap call (although I didn’t understand why the ioremap failed until much later).
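Enabling the Hyper-V options on top of the GCP config is essentially an overlay of one config onto another. Below is a minimal Python sketch of that overlay, assuming the standard .config line formats (`CONFIG_FOO=value` and `# CONFIG_FOO is not set`). The Hyper-V option list is an illustrative subset I chose, not the exact set used for this build.

```python
import re
from typing import Dict

# Matches "CONFIG_FOO=value" and "# CONFIG_FOO is not set".
_SET = re.compile(r"^(CONFIG_[A-Za-z0-9_]+)=(.*)$")
_UNSET = re.compile(r"^# (CONFIG_[A-Za-z0-9_]+) is not set$")


def parse_config(text: str) -> Dict[str, str]:
    """Map each option name to its value; unset options map to 'n'."""
    opts: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        m = _SET.match(line)
        if m:
            opts[m.group(1)] = m.group(2)
            continue
        m = _UNSET.match(line)
        if m:
            opts[m.group(1)] = "n"
    return opts


def render_config(opts: Dict[str, str]) -> str:
    """Serialize options back to .config syntax (comments are not preserved)."""
    lines = []
    for name, value in opts.items():
        if value == "n":
            lines.append(f"# {name} is not set")
        else:
            lines.append(f"{name}={value}")
    return "\n".join(lines) + "\n"


def overlay(base: str, fragment: Dict[str, str]) -> str:
    """Apply a fragment on top of a base config; the fragment's values win."""
    opts = parse_config(base)
    opts.update(fragment)
    return render_config(opts)


# Illustrative subset of Hyper-V options (an assumption, not the author's list).
HYPERV_FRAGMENT = {
    "CONFIG_HYPERV": "y",
    "CONFIG_HYPERV_UTILS": "y",
    "CONFIG_HYPERV_NET": "y",
    "CONFIG_HYPERV_STORAGE": "y",
    "CONFIG_PCI_HYPERV": "y",
}

# Usage:
#   new_text = overlay(open(".config").read(), HYPERV_FRAGMENT)
#   then write it back and run `make olddefconfig`.
```

The kernel tree’s own scripts/kconfig/merge_config.sh does the same job. Either way, `make olddefconfig` should be run afterwards, because Kconfig may silently drop an option whose dependencies are not met, so it is worth checking that the Hyper-V options survived.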

Since kernel 6.11 was the version I had last used on GCP, I compiled it in the hope that it would work there, and sure enough, it did. Frustratingly, though, 6.11 instead fails to boot on Azure’s TDX CVM, because it is unable to initialize the CPUs correctly. This was the error message I got:

[ 0.813174] acpiphp: ACPI Hot Plug PCI Controller Driver version: 0.5

[ 2.796024] mce: CPUs not responding to MCE broadcast (may include false positives): 0

[ 2.796024] Kernel panic - not syncing: Timeout: Not all CPUs entered broadcast exception handler

I decided then and there that there were 2 issues I really needed to understand:

- What’s causing my vTPM to fail to initialize on GCP?

- What’s causing my CPUs to fail to initialize on Azure TDX CVMs?

To narrow down when the first issue was introduced, I compiled kernel 6.12, and that was exactly where it broke again. With the help of ChatGPT, I found this Linux Kernel Mailing List thread, which explained the issue. Essentially, all MTRRs are initialised as write-back (WB), but some devices, like the vTPM, require uncacheable (UC) mappings; this mismatch caused the ioremap to fail, so the vTPM driver did not initialize correctly. I had to read the thread multiple times to figure out what was happening before I finally concluded that commit 8e690b8 caused the issue. After commenting out the relevant line from that commit, recompiling 6.15.2 and testing, the issue finally went away. I figured it should be fine to leave it commented out for now, since there is still no good fix for this MTRR initialization problem, and kernels before 6.12 did not include this change anyway.
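To double-check which mainline release first shipped a commit like 8e690b8, git can list every tag whose history contains it. The sketch below wraps `git tag --contains` and picks the numerically lowest vX.Y or vX.Y.Z tag, deliberately skipping -rc tags. It assumes a clone of the mainline tree with tags fetched; the helper names are my own.

```python
import re
import subprocess
from typing import List, Optional

# Mainline release tags look like v6.12 or v6.12.3; -rc and other tags are skipped.
_RELEASE = re.compile(r"^v(\d+)\.(\d+)(?:\.(\d+))?$")


def earliest_release(tags: List[str]) -> Optional[str]:
    """Return the numerically lowest release tag, or None if there is none."""
    releases = []
    for tag in (t.strip() for t in tags):
        m = _RELEASE.match(tag)
        if m:
            # A missing patch level (e.g. "v6.12") counts as 0.
            key = tuple(int(g or 0) for g in m.groups())
            releases.append((key, tag))
    return min(releases)[1] if releases else None


def tags_containing(commit: str, repo: str = ".") -> List[str]:
    """Wrap `git tag --contains <commit>`: every tag whose history includes it."""
    result = subprocess.run(
        ["git", "-C", repo, "tag", "--contains", commit],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.splitlines()


# Usage, inside a mainline kernel checkout:
#   print(earliest_release(tags_containing("8e690b8")))
```

Sorting numerically matters here: a plain string sort would place v6.10 before v6.9.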

For the second issue, finding the minimal patch to solve the problem was much harder. ChatGPT pointed me to 2 discussions: the first about an AP startup issue on SEV-SNP, and the second about the wakeup mailbox. However, several things didn’t quite line up in my head:

- I had no issues booting an SEV-SNP VM on kernel 6.11 on Azure, so why was it suggesting a patch related to SEV-SNP?

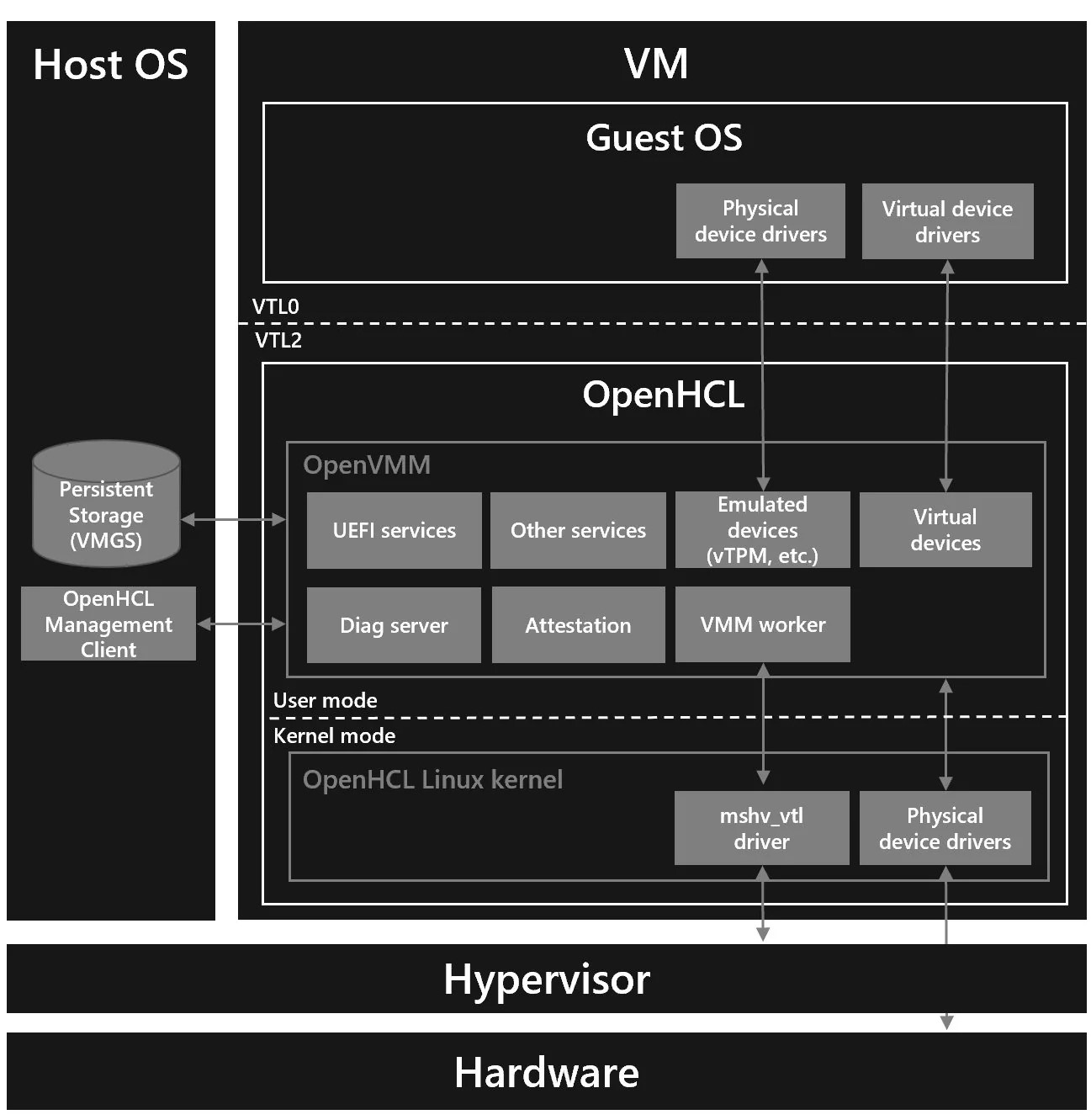

- The wakeup mailbox issue should not be a problem for us, because we’re running a typical VTL0 guest. That said, I was surprised to learn that the VTL2 guest needs to boot its CPUs via a device tree, which is especially rare on the x86 architecture.

- My third point had to do with my understanding of Azure’s architecture for Confidential VMs. On Azure, confidential guests are booted via a paravisor developed by Microsoft, called OpenHCL, which runs at VTL2. It seems like a rather elaborate architecture, but I believe it was designed so that guests can run unmodified and unenlightened to Confidential VM extensions, letting users gain the benefits of Confidential VMs without porting their workloads to a more modern environment. This matters because on GCP and AWS, the guest needs to be on at least kernel 6.8. However, given that my own compiled kernel 6.11 failed to boot on Azure, it seems that running an arbitrary unmodified kernel may not be entirely possible.

In any case, pulling these 2 patches into my 6.11 branch did not solve the issue. Unfortunately, I didn’t have time to dig deeper into solving this problem completely, so this is where my exploration ends. I’m fairly certain that the issue was fixed somewhere in kernel 6.14.

Was this little exploration all doom and gloom? Not quite. I had been a little out of touch with Linux kernel development for a few years and had forgotten some tricks for finding my way around the codebase much more easily.

The first: how do I quickly find commits that may be related to what I’m exploring? It turns out git log --grep "...." was my best friend. Git is so powerful, and there are always more things I didn’t know it could do. I’ve also found git bisect really useful in several debugging scenarios, although I didn’t use it this time.
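A minimal Python sketch of that kind of search, wrapping `git log --grep`. It assumes you want case-insensitive matching and, when several patterns are given, commits that match all of them (git’s default is any of them, hence `--all-match`); the query in the usage comment is a hypothetical example.

```python
import subprocess
from typing import List, NamedTuple, Sequence


class Commit(NamedTuple):
    sha: str
    subject: str


def build_log_cmd(patterns: Sequence[str], paths: Sequence[str] = (),
                  limit: int = 50) -> List[str]:
    """Build a `git log` invocation that greps commit messages case-insensitively."""
    cmd = ["git", "log", "--regexp-ignore-case", f"--max-count={limit}",
           "--format=%h %s"]
    cmd += [f"--grep={p}" for p in patterns]
    if len(patterns) > 1:
        # By default git matches commits containing ANY pattern; require all.
        cmd.append("--all-match")
    if paths:
        # Only consider commits that touch these paths.
        cmd += ["--", *paths]
    return cmd


def parse_log(output: str) -> List[Commit]:
    """Split '%h %s' lines into (abbreviated hash, subject) pairs."""
    commits = []
    for line in output.splitlines():
        if line.strip():
            sha, _, subject = line.partition(" ")
            commits.append(Commit(sha, subject))
    return commits


def search_commits(patterns: Sequence[str], paths: Sequence[str] = (),
                   repo: str = ".", limit: int = 50) -> List[Commit]:
    """Run the search in `repo` and return matches in git log's default order."""
    result = subprocess.run(build_log_cmd(patterns, paths, limit), cwd=repo,
                            check=True, capture_output=True, text=True)
    return parse_log(result.stdout)


# Usage (hypothetical query), inside a kernel checkout:
#   for c in search_commits(["mtrr", "tdx"], paths=["arch/x86"]):
#       print(c.sha, c.subject)
```

Restricting the search to a path such as arch/x86 cuts down the noise considerably in a tree as large as the kernel’s.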

The second: what’s the quickest way to backport a patch? ChatGPT initially suggested using b4 to pull patches from the mailing list, or even applying them with patch. But I quickly ran into issues that made it difficult to debug what went wrong when applying a patch. Eventually, I figured that since the patches are already somewhere in the git tree, and I was already using the Linux kernel from git.kernel.org, I might as well use git directly. I ran git log to find the commit by part of its message, copied its commit hash, then used git cherry-pick to apply it to the branch I wanted, fixing any conflicts and compilation issues along the way.
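The git-only backport flow described above can be scripted roughly as follows. This is a minimal Python sketch, assuming the commit lives on a ref you have already fetched and that a literal match on part of its message identifies it uniquely; the ref, branch name and message in the usage comment are placeholders.

```python
import subprocess
from typing import List, Sequence


def git(args: Sequence[str], repo: str = ".") -> str:
    """Run `git <args>` in `repo` and return stdout; raises on failure."""
    result = subprocess.run(["git", "-C", repo, *args], check=True,
                            capture_output=True, text=True)
    return result.stdout


def find_commit_args(message: str, ref: str) -> List[str]:
    """git log args listing full hashes of commits on `ref` whose message contains `message`."""
    # --fixed-strings treats the --grep pattern literally rather than as a regex.
    return ["log", "--format=%H", "--fixed-strings", f"--grep={message}", ref]


def cherry_pick_args(sha: str) -> List[str]:
    # -x appends "(cherry picked from commit <sha>)" to the new commit message,
    # which makes it easy to trace where a backport came from.
    return ["cherry-pick", "-x", sha]


def backport(message: str, source_ref: str, target_branch: str,
             repo: str = ".") -> str:
    """Find exactly one commit by message on `source_ref` and cherry-pick it."""
    shas = git(find_commit_args(message, source_ref), repo).split()
    if len(shas) != 1:
        raise LookupError(f"expected exactly one matching commit, found {len(shas)}")
    git(["switch", target_branch], repo)
    # On conflicts this raises CalledProcessError; resolve them by hand, then
    # run `git cherry-pick --continue` (or `--abort` to give up).
    git(cherry_pick_args(shas[0]), repo)
    return shas[0]


# Usage (placeholder message and names):
#   backport("part of the commit message", "origin/master", "my-6.11-branch")
```

Refusing to continue when the message matches zero or several commits is a deliberate choice: picking the wrong commit silently is worse than having to refine the search string.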

Last but not least: compiling the Linux kernel has been a big bugbear of mine, since it takes around an hour. I noticed that much of that time went into loadable kernel modules I wasn’t using anyway, since my disk image only uses around 20 of them. All I had to do was change over 2000 entries in the build config from CONFIG_XXXX=m to CONFIG_XXXX=n with a simple find-and-replace, leave on only the 20+ modules I needed, and let the kernel build update the configuration accordingly. That saved me a lot of time, since compilation now takes somewhere between 10 and 15 minutes, which is much more palatable. One day, I’ll get around to putting together a more minimal kernel config so I can shave off even more compilation time.
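The find-and-replace above can be done a little more safely with a short script that skips a keep-list. This is a minimal Python sketch under my own assumptions: the keep-list is hypothetical (only CONFIG_ENA_ETHERNET, the ENA driver mentioned in the footnote, is shown), and only lines that are exactly `CONFIG_XXXX=m` are touched.

```python
import re
from pathlib import Path
from typing import Set, Tuple

# A tristate option currently built as a loadable module.
_MODULE = re.compile(r"^(CONFIG_[A-Za-z0-9_]+)=m$")


def strip_modules(config_text: str, keep: Set[str]) -> Tuple[str, int]:
    """Rewrite CONFIG_XXX=m as CONFIG_XXX=n, except for options listed in `keep`.

    Returns the new config text and how many options were disabled.
    """
    out, disabled = [], 0
    for line in config_text.splitlines():
        m = _MODULE.match(line)
        if m and m.group(1) not in keep:
            line = f"{m.group(1)}=n"
            disabled += 1
        out.append(line)
    return "\n".join(out) + "\n", disabled


def strip_modules_in_place(path: str, keep: Set[str]) -> int:
    """Apply strip_modules to a .config file on disk; returns the count disabled."""
    cfg = Path(path)
    text, disabled = strip_modules(cfg.read_text(), keep)
    cfg.write_text(text)
    return disabled


# Usage (hypothetical keep-list):
#   strip_modules_in_place(".config", {"CONFIG_ENA_ETHERNET"})
#   then run `make olddefconfig` so Kconfig re-resolves dependencies.
```

After `make olddefconfig`, the `=n` lines are written back as `# CONFIG_XXXX is not set`, and any module that a built-in (`=y`) option selects may be forced back on, so diffing the result is worthwhile. The kernel’s own `make localmodconfig`, which disables modules that are not currently loaded according to lsmod, is another route to a similar result.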

---

[1] I ended up using the same kernel for AWS and GCP, because GCP’s kernel config already built the Elastic Network Adapter (ENA) driver as a kernel module, which I needed for networking on AWS VMs. However, the Hyper-V related options had not been enabled in that build, so I needed a different kernel build for Azure VMs.